The company was millions of dollars in debt and on the verge of bankruptcy when Fred took his gamble, but his winnings set it on a path that led to revenues of $65.4 billion in 2018.

Fred’s business was FedEx, the multinational courier delivery service.

Stories about huge risks paying off against long odds are exciting. To many, a willingness to take risks demonstrates courage, or even a kind of shrewdness.

Of course, the outcome could just as easily have gone the other way, and we’d never have heard Fred’s tale. Who knows how many others have risked it all in similar circumstances and lost? If we heard their stories, we might consider their actions reckless or even foolish.

Risks are easier to judge in hindsight, but in the moment when we decide whether to take a risk or play it safe, deep-seated emotional and evolutionary forces are at play.

In fact, even those of us who have made a career out of assessing risk may have less control over our decisions than we like to think, as we learned when we spoke to risk expert Dr Rick Norris.

How we judge risk

Imagine you’re standing outside a room. The door is open.

In the room there’s a briefcase containing £50,000 in cash.

The door will close automatically in 30 seconds and can never be opened again. If you don’t go in, you’ll miss out on the money. If you go in but fail to make it back out in time, you’ll be trapped forever.

Would you take the risk of retrieving the cash?

It’s fair to say that most of us would judge 30 seconds as plenty of time to walk into a room, pick up a briefcase and leave. The risk of being trapped is pretty small, and the consequences of walking away with the cash would be hugely positive.

In short, most of us would probably go for it.

We wouldn’t feel the need to confer with anyone else about our decision, and we wouldn’t need anyone to explain the balance of risk to us; it’s just something we understand.

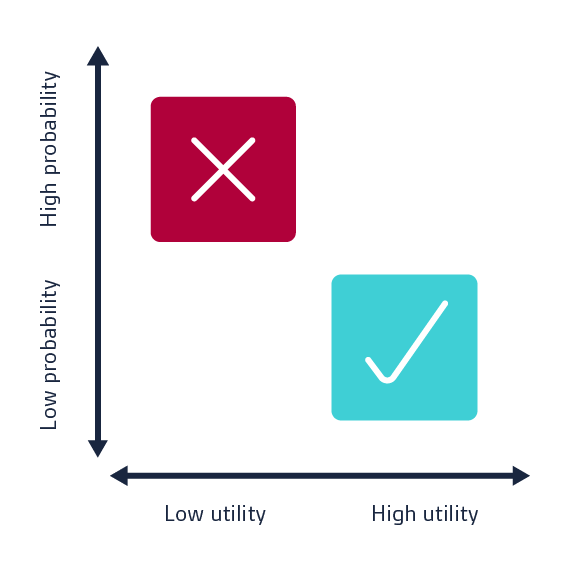

This process of balancing the probability of an event happening with its consequences (referred to as ‘utility’ in psychology) is key.

If we think about it algebraically, with X representing probability and Y representing utility, it looks like this:

· Where X is greater than Y, the risk may not be worth taking.

· Where Y is greater than X, the risk may be worth taking.

· Where X is equal to or close to Y (or X is unknowable), the decision becomes more difficult.
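The rule of thumb above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: probability (the chance of the bad outcome) and utility (the size of the payoff) are both assumed to be scored on the same 0 to 1 scale, which real decisions rarely allow, and the function name `judge_risk` and its `margin` parameter (the gap below which the two count as "close") are hypothetical.

```python
from typing import Optional


def judge_risk(probability: Optional[float], utility: Optional[float],
               margin: float = 0.1) -> str:
    """Compare probability with utility, both assumed to be scored 0-1.

    `probability` is the chance of the bad outcome; `utility` is the size of
    the payoff. `margin` is a hypothetical threshold for "close".
    """
    if probability is None or utility is None:
        # One side can't be known, so the comparison can't be made.
        return "difficult to call"
    if abs(probability - utility) <= margin:
        # Probability and utility are roughly balanced.
        return "difficult to call"
    if probability > utility:
        return "may not be worth taking"
    return "may be worth taking"


# The briefcase scenario: a small chance of being trapped, a large payoff.
print(judge_risk(probability=0.05, utility=0.9))  # may be worth taking
```

Putting both inputs on one scale is what lets the sketch compare them directly; in practice that scoring step is exactly where judgement, and bias, creeps in.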

In our FedEx example, the utility was $27,000 — or enough cash to keep FedEx’s lights on for another week.

The probability of Fred Smith losing his last $5,000 at blackjack was significant. According to online casino 777.com, players can expect to be dealt a blackjack (an ace plus a ten-value card) only once every 21 hands.
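The figure of one blackjack in roughly 21 hands can be checked with a little combinatorics. A natural blackjack is a two-card hand made of an ace and a ten-value card (10, J, Q or K). This sketch assumes a single, freshly shuffled 52-card deck; casinos often deal from multi-deck shoes, which shifts the figure slightly. Being dealt a blackjack is also only one way to win a hand, so this is not the overall probability of winning or losing.

```python
from math import comb

DECK_SIZE = 52
ACES = 4
TEN_VALUE_CARDS = 16  # four each of 10, J, Q and K

# Number of distinct two-card hands that can be dealt from one deck.
all_hands = comb(DECK_SIZE, 2)          # 1,326
# Hands that pair any ace with any ten-value card.
natural_hands = ACES * TEN_VALUE_CARDS  # 64

p_blackjack = natural_hands / all_hands
print(f"P(blackjack) = {p_blackjack:.4f}")          # P(blackjack) = 0.0483
print(f"About one hand in {1 / p_blackjack:.1f}")  # About one hand in 20.7
```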

Whether he felt the utility justified the risk or simply didn’t understand or care about the probability, he took the gamble.

The great filter

Dr Rick Norris is a Chartered Psychologist and the Founder of Mind Health Development. Dr Norris specialises in stress, anxiety and depression. He’s also an expert in risk and risk aversion.

We spoke to Dr Norris about the biological and psychological processes that handle risk, along with what makes some people more risk averse than others and whether it’s possible for a risk averse person to become a risk taker.

It all happens, as Dr Norris explains, in your brain’s Reticular Activating System (RAS).

In simple terms, the RAS is a bundle of nerves in the brainstem that acts as a filter for information.

If you’re reading this in a busy office, you might be surrounded by people talking. Your RAS is what allows you to tune out all the chatter and focus on what you’re reading.

It’s also the part of your brain responsible for the Baader-Meinhof phenomenon, in which, soon after you learn about something new, you start noticing it ‘coincidentally’ cropping up in everyday life.

If, after reading this article, you hear Baader-Meinhof mentioned elsewhere, it’ll be your RAS at work.

Flight risk

Dr Norris explains how your RAS processes information to help you make decisions.

“The Reticular Activating System operates as a filter. It notices certain things and draws our attention to them. In people who are more risk averse, the filter is more likely to draw their attention to the things that can go wrong.

“We call them threat-sensitive people. Threat-sensitive people are more risk averse, and they’re also more prone to pessimism.”

Dr Norris has worked with National Air Traffic Services and found many of its staff to be very risk averse. That makes sense, since the utility they have to balance against probability involves people’s lives.

Though the probability of an air traffic incident is relatively small, the utility or consequence if an incident were to happen is massively negative. On balance, it’s no surprise that an air traffic controller would be a risk averse person.

Probability versus plausibility

Whatever risk is being assessed, our judgements of probability and utility are fairly subjective. For example, a pessimist might overestimate the probability of something happening, or at least fail to judge it entirely objectively.

Meanwhile, an optimist might judge the utility to be worth more than it objectively is. The point is that our personalities and outlooks influence our approach to risk, regardless of the actual probability and utility at play.

Whenever we make decisions, we make them with the entire weight of our life experiences. If someone has taken a risk in the past that didn’t pay off, they might err on the side of caution in future and become more risk averse.

When this happens, the decision maker is actually balancing utility against plausibility, rather than probability. In essence, they’re asking themselves whether something could happen, rather than how likely it is to happen.

If FedEx’s Fred Smith had previously gambled and lost $5,000 on blackjack, he might have thought twice about betting his company’s money. But losing money in a game of blackjack in the past doesn’t change the probability of losing in the future.

It might be more plausible to you, but it’s no more or less probable.
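A quick simulation illustrates why a past loss doesn’t change the odds. The sketch is a simplification: it models each hand as an independent win-or-lose trial with a hypothetical win probability of 0.42 (an illustrative number, not a real blackjack statistic), and it ignores pushes and the way the cards left in a shoe can shift the odds slightly. It compares the win rate across all hands with the win rate on hands that immediately follow a loss.

```python
import random

WIN_PROBABILITY = 0.42  # hypothetical per-hand win rate, for illustration only
N_HANDS = 200_000

rng = random.Random(2019)  # fixed seed so the run is repeatable
# True = hand won, False = hand lost; each hand is drawn independently.
results = [rng.random() < WIN_PROBABILITY for _ in range(N_HANDS)]

# Win rate over every hand played.
overall = sum(results) / N_HANDS

# Win rate only on hands that come straight after a losing hand.
after_loss = [curr for prev, curr in zip(results, results[1:]) if not prev]
after_loss_rate = sum(after_loss) / len(after_loss)

print(f"Win rate overall:      {overall:.3f}")
print(f"Win rate after a loss: {after_loss_rate:.3f}")
```

Both rates come out close to 0.42: the earlier loss may make losing feel more plausible, but in this model it makes it no more probable.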

Dr Norris said: “After 9/11, loads and loads of people stopped taking internal flights in America. My argument would be: this is a fantastic time to take flights, your safety is never going to be greater. But the emotional part of people’s brains was saying: ‘Well, we’ve seen it on the TV, we know what happens — best not to fly’.”

Nature versus nurture

While risk aversion might seem like a learned behaviour, there is evidence to suggest it could be at least partly innate. More speculative evidence has suggested, for example, that women are more risk averse than men.

There’s little doubt that our experiences shape our outlook and attitude to risk, but the part of our brain that reacts emotionally — the limbic system — is five times stronger than the frontal system, which reacts rationally.

The limbic system is ancient and has been reinforced by 200,000 years of human survival, so it’s not something we can easily ignore, and it can cloud our judgement when we’re assessing risk.

Dr Norris explained that risk aversion served an evolutionary purpose, with early humans tending to overestimate probability in relation to utility.

For example, a rustling in the bushes might be an animal that would feed the tribe for the next few days, or it could be rival tribesmen, a dangerous animal or some other threat. Survival was too important to risk.

We’ve carried these instincts with us for millennia, and even today, in 2019, they’re hard-wired. So while a risk averse person can learn to become more of a risk taker, and vice versa, it’s extremely difficult.

Under pressure

For most of us, consciously weighing up a serious risk is an infrequent event, and hopefully not too serious a one. For some, however, risk is all in a day’s work.

Risk management professionals are employed to spot, analyse and validate potential and emerging risks. They also research and develop ways to mitigate those risks. And at every stage, they’re being asked to balance probability against utility.

For example, they might be asked to weigh the cost and likelihood of a fine for not complying with a piece of regulation against the cost of putting systems and processes in place to comply, and then make a decision. These decisions can involve large sums of money, their company’s reputation and elements of personal liability.
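One common way to put numbers on this trade-off is to compare the expected cost of a fine (its likelihood multiplied by its size) with the cost of compliance. The function names and figures below are hypothetical, and the sketch deliberately leaves out reputational damage and personal liability, which a simple sum can’t capture.

```python
def expected_fine(probability_of_fine: float, fine_amount: float) -> float:
    """Expected cost of non-compliance: likelihood of a fine times its size."""
    return probability_of_fine * fine_amount


def compliance_case(probability_of_fine: float, fine_amount: float,
                    compliance_cost: float) -> str:
    """Summarise whether the expected fine alone justifies the compliance spend."""
    exposure = expected_fine(probability_of_fine, fine_amount)
    if compliance_cost < exposure:
        return (f"Comply: £{compliance_cost:,.0f} to comply vs "
                f"£{exposure:,.0f} expected fine")
    # The sums alone don't settle it; reputation and liability must make the case.
    return (f"Numbers alone don't justify it: £{compliance_cost:,.0f} to comply vs "
            f"£{exposure:,.0f} expected fine")


# Hypothetical figures: a 10% chance of a £2m fine vs £150,000 to comply.
print(compliance_case(0.10, 2_000_000, 150_000))
# Comply: £150,000 to comply vs £200,000 expected fine
```

The second branch is where the psychology matters most: when the arithmetic doesn’t settle the question, the decision falls back on judgement, with all its biases.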

It means they’re making risk assessments under real pressure and, as we’ve discussed, with all the baggage of their experiences and their grumbling limbic systems to contend with.

It’s little wonder that some risk management professionals have a much smaller appetite for risk than others, even when caution could be to their organisation’s detriment.

But whatever their level of seniority, personal liability or personality — you’ll find the same biological and psychological processes whirring away inside their heads.

Getting C-suite buy-in

For some risk professionals, convincing people in leadership roles of the need to go above and beyond their minimum regulatory requirements can be a challenge.

Asking for an investment that will at best minimise disruption and at worst simply maintain the status quo is a hard sell — but understanding how people’s perception of risk differs can be a powerful enabler.

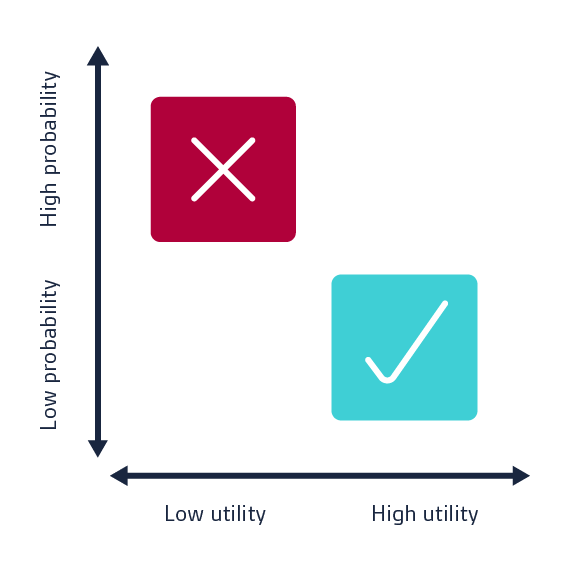

For example, CEOs tend to be less risk averse and more optimistic. As we’ve seen, optimists and pessimists approach risk from different vantage points, so convincing each of them calls for a different approach.

When an event has a 1 in 3 probability of happening, the risk professional will naturally highlight the 33% risk, whereas the CEO might see the 67% chance of it not happening.

In that case, the risk manager might be better off highlighting the potential utility of the event: the personal liability, legal action or reputational damage it could plausibly cause (perhaps citing real examples), rather than sticking to the probability argument.

Considering how different people perceive and approach risk could make all the difference to your next appeal to the C-suite.